With a good computer you can make anything – inside sick world of deep fake porn plaguing celebs & other innocent women

Hundreds of celebrities have been caught up in vile ‘deepfake’ content

IT is the scourge of celebrities around the world – a degrading digital sex assault.

AI technology is being used to create videos of women in graphic sex ‘films’, which are then sold online.

The Sun was able to make a deepfake video of feature writer Grace Macaskill in TEN MINUTES. Credit: The Sun

The reporter’s face was merged with existing footage depicting an Oscar winner. Credit: Getty

Hundreds of celebrities have been caught up in vile “deepfake” content including singer Taylor Swift and actresses Angelina Jolie and Emma Stone.

But it is not just the rich and famous who have fallen victim, with bogus footage of thousands of innocent females popping up on the internet.

Many victims are oblivious to the existence of the perverse images and clips.

Laws were introduced this week making it illegal to create sex deepfakes — which are images or videos that have been digitally altered with the help of artificial intelligence to replace the face of one person with another.

Offenders face an unlimited fine and could even be sent to prison.

But campaigners fear the legislation does not go far enough, and that this is the new frontline in revenge porn.

Terrifyingly, The Sun was able to make a deepfake video in just ten minutes.

We downloaded a free app to merge our reporter’s face with existing footage of an Oscar winner.

Users can sign up to view content for as little as £16 a month.

Sun video producer Ben Malandrinos said: “If you have a graphics card and a very good computer, you can pretty much create whatever you want.”

We also found that some of the country’s biggest names, from Holly Willoughby and Michelle Keegan to Amanda Holden and Susanna Reid, are victims of disgusting AI-generated videos.

Our trawl of one of the most famous deepfake sites in the world — which we are not naming — found 26 well-known British women, including sports stars, actresses and authors.

‘Victims left suicidal’

Even the Princess of Wales’ face has been used to create creepy sex films.

But campaigners worry that a “loophole” in the new laws could see court cases collapse without a conviction, if they ever get that far.

Taylor Swift is one of the most well-known celebs to have been targeted with sick deepfake porn vids. Credit: Getty

Angelina Jolie has also been caught up in the vile content. Credit: Getty

The prosecution will have to prove the creator of the material meant to cause distress — something critics say will be hard to substantiate.

Big tech has also been accused of failing victims, despite a promise to control false content.

Professor Clare McGlynn, a legal expert in pornography, said of the legal shake-up: “It’s a bold step by the Government but doesn’t go far enough.

“The only motive that will matter with the new law is whether someone was trying to cause malice.

“We know from previous laws about non-consensual sharing that proving distress is a challenge for police and puts off prosecution.”

Channel 4 news presenter Cathy Newman, one of the few celebrities to talk about being targeted, said the loopholes needed “tightening up.”

She said: “There are two main issues. Really, this is just legislation in the UK and a lot of this deep fake is not made here, so wouldn’t be covered by this new law.

“So you need global action on this. And most of all, you need big tech to take responsibility for this abuse that they are facilitating.”

Former Love Island contestant Cally Jane Beech, who campaigned for changes in the law, told how she was left feeling “extremely violated” after being targeted in vile fake images distributed on social media.

She told a Parliamentary roundtable: “My underwear was stripped away, and a nude body was imposed in its place and uploaded onto the internet without my consent.

Former Love Islander Cally Jane Beech spoke to a Parliamentary roundtable and told of how she felt ‘extremely violated’ by deepfake images. Credit: Getty

“The likeness of this image was so realistic that anyone with fresh eyes would assume this AI-generated image was real, when it wasn’t. Nevertheless, I felt extremely violated.”

British Twitch creator Sweet Anita last year told The Sun how she discovered “horrific” clips of her image superimposed onto pornographic movies.

Anita, who did not share her real name, said: “You could deepfake anyone. Anyone from any walk of life could be targeted.

“I have never shared a single drop of sexual content in my life, but now they just assume that I have and I must want this.

“It’s not easy to differentiate this from reality. If people see this video in 10 or 20 years’ time, no one will know whether I was a sex worker or this was a deepfake.”

Anita, from East Anglia, added: “This will impact my life in a similar way to revenge porn. I’m just so frustrated, tired and numb.”

Many ordinary women weren’t even aware of deepfakes until earlier this year, when superstar Taylor Swift had AI images appearing to show her in intimate positions shared online.

She is currently waging war on social media bosses after one of the explicit fakes was viewed 47million times on X, previously Twitter, before it was removed.

The Sun can reveal that more than one in ten of the world’s fake video sites operate within the UK. And the problem is growing.

A Channel 4 investigation last month found that some 250 British celebrities had their images digitally doctored among 4,000 online deepfakes.

The probe revealed that the five main sites received 100million views in the space of three months.

A 2023 report by startup Home Security Heroes estimated there were 95,820 deepfake videos online last year — a 550 per cent increase since 2019.

Seven out of 10 porn sites now host deepfake content and research by tech firm Sensity AI found the number of videos doubling every six months.

When The Sun searched one of the biggest deepfake sites, we found digitally altered porn relating to stars such as journalist Emily Maitlis, presenter Maya Jama, plus singers Cheryl Tweedy and Pixie Lott.

According to content detector.AI, 12 per cent of deepfake websites are based in Britain.

Porn sites are coining in millions of pounds through bogus content, yet it’s frighteningly simple to make a deepfake.

Prof McGlynn, who works at Durham University, said victims were impacted on a daily basis, with some left feeling suicidal.

She added: “They feel violated, humiliated and then, quite often, what happens is they get an awful lot of abuse and harassment from those images being up online.

“It can be devastating and, for some people, potentially life threatening because they have suicidal thoughts.

“There’s the worry that it is going to be shared again and again.”

Hayley Chapman, abuse claims solicitor at Bolt Burdon Kemp, also expressed concern about the potential legal technicality.

She said: “The requirement to prove intent to cause alarm, distress or humiliation gives websites and social media platforms an excuse not to remove deepfake material — and, likewise, nudity apps a reason to justify their existence — on the basis that it is only produced for fun or the intent is simply not known.”

Victims and Safeguarding Minister Laura Farris told Cathy Newman that the Government was “working through” any loophole.

She said: “The first thing is, if you use an app to create one of these images you will be in the scope of the offence.

“You will be committing a crime, there is no doubt about that.”

The Ministry of Justice stressed anyone who creates X-rated images will feel the full force of the law.

A spokesperson explained: “Sexually explicit deepfakes are degrading and damaging.

“The new offence will mean that anyone who creates an explicit image of an adult without their consent, for their own sexual gratification or with intent to cause alarm, distress or humiliation, will be breaking the law.”

News

ANTHONY JOSHUA Stunned Everyone When He REVEALED The Truth About His Affair With Amir Khan’s Wife.K

ANTHONY JOSHUA Stunned Everyone When He REVEALED The Truth About His Affair With Amir Khan’s Wife Anthony Joshua jokes he wishes he slept with Amir Khan’s wife as he denies Faryal Makhdoom ‘affair’ claim. The former heavyweight champ, 32, was…

“I will destroy you for Mike, HE’S TOO OLD FOR THAT” Roy Jones Jr ready to step in and REPLACE Mike Tyson and face Jake Paul on July 20.k

“HE’S TOO OLD FOR THAT” Roy Jones Jr ready to step in and REPLACE Mike Tyson and face Jake Paul on July 20. Roy Jones Jr. has thrown his name into the mix to replace the injured Mike Tyson in…

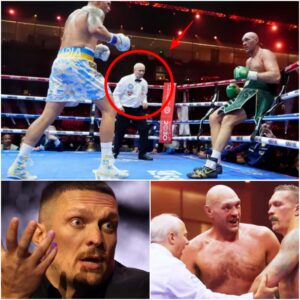

TERRIFYING! Oleksandr Usyk REVEALS SH0CKING Details On DIRTY Referee.K

TERRIFYING! Oleksandr Usyk REVEALS SH0CKING Details On DIRTY Referee In a startling revelation, Oleksandr Usyk, the reigning heavyweight champion, has exposed shocking details about a controversial referee involved in one of his recent fights. The Ukrainian boxing sensation, known for his…

“YOU’RE A COWARD” Paris Fury Felt ASHAMED After Her Husband Tyson Fury Announced His Retirement Following A Humi_Liating Loss To Usyk .K

“YOU’RE A COWARD” Paris Fury Felt Ashamed After Her Husband Tyson Fury Announced His Retirement Following A Humi_Liating Loss To Usyk In a dramatic turn of events, Paris Fury has expressed feelings of shame and disappointment following her husband Tyson Fury’s announcement of his…

‘I Could Easily Beat Mike Tyson In A Boxing Match’: Jake Paul’s Brother Logan Paul Challenge Mike Tyson.K

Jake Paul’s Brother Logan Paul Also Wants To Challenge Mike: ‘I Could Easily Beat Mike Tyson In A Boxing Match’ In the latest twist of events in the world of boxing and entertainment, Logan Paul, brother of controversial YouTuber-turned-boxer Jake…

“I WANT HIM TO REGRET IT” : John Fury Becomes Jake Paul’s Coach After Being Fired by Son Tyson Fury – In a SHOCKING twist in the world of boxing.K

John Fury Becomes Jake Paul’s Coach After Being Fired by Son Tyson Fury: “I Want Him to Regret It” In a shocking twist in the world of boxing, John Fury has officially signed on as the coach for YouTube sensation…

End of content

No more pages to load